Introduction

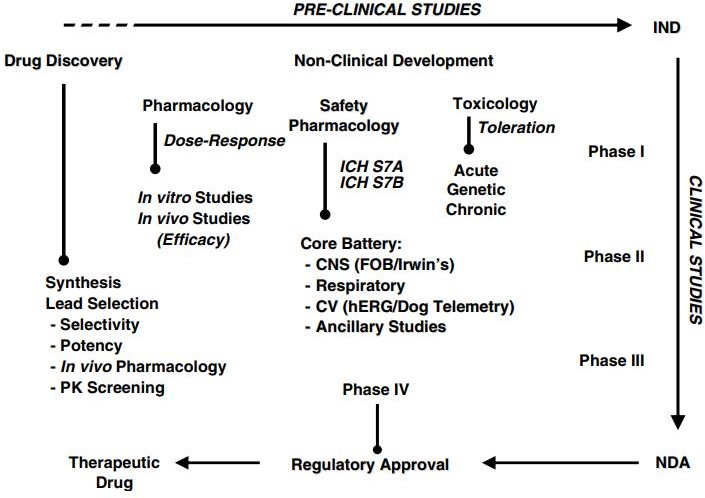

Safety pharmacology is critical throughout the drug development process. Safety pharmacology assays, tests, and models estimate the clinical risk profile of a potential new medicine prior to first-in-human (FiH) investigations. During clinical development, safety pharmacology may be used to investigate, and possibly explain, both anticipated and unanticipated side effects (e.g., adverse events, changes in vital signs, aberrant laboratory results) in order to refine the original clinical risk profile.1 Safety pharmacology identifies "possible negative pharmacodynamic effects of a chemical on physiological processes in the therapeutic range and above" that are not found by typical non-clinical toxicological research.2 Safety pharmacology studies are therefore conducted to assure the safety of participants in FiH trials by improving the selection of lead candidate medicines.2 Efforts to standardise safety pharmacology research have produced several guidelines from the International Conference on Harmonisation (ICH), including ICH S7A and S7B.3

The core battery, the evaluation of the vital organ systems, namely the cardiovascular system (CVS), central nervous system (CNS), and respiratory system (RS),4 is the part of safety pharmacology conducted according to good laboratory practice (GLP) standards and ICH recommendations. Supplemental studies of the renal and gastrointestinal (GI) systems, as well as other organ-specific follow-up investigations, may augment the core battery studies. These are optional, however, and their implementation depends on the nature of the lead candidate medicine being evaluated and its expected adverse effects.4 Previously, safety pharmacology investigations were conducted on the identified candidate medicine during the development phase, prior to FiH trials. Currently, they are initiated earlier, in the drug research phase. In addition to analysing and minimising the risks associated with the chosen candidate medicine, safety pharmacology studies may now improve lead candidate selection by identifying hazards and eliminating novel chemical entities (NCE) with safety liabilities.5 This review aims to provide a comprehensive overview of current practices and newer technologies, followed by the emerging concepts in safety pharmacology studies: frontloading, alternative models, integrated core battery assessments, integration of safety pharmacology endpoints into regulatory toxicology studies, drug-drug interactions, and translational safety pharmacology.5

Supplemental Organ Systems and Studies

Gastrointestinal System

During drug development, gastrointestinal (GI) problems of varying severity are often seen and are associated with drug-induced morbidity. In addition to causing nausea, emesis, and constipation, drug-induced GI problems may impair the absorption of other medications.6 To enhance the safety and effectiveness of NCE development, it is essential to routinely examine the impact of the test medication on the GI system.

In accordance with ICH S7A, the effects of test substances on gastric emptying, intestinal motility, and gastric secretion should be evaluated in suitable animal models.6 Evaluation of GI function is supplemental and, as such, is recommended depending on what is known about the NCE being examined. Commonly affected GI processes include motility and ulceration, as well as gastric mucus formation and hydrochloric acid and bicarbonate secretion; such changes are often seen with prostaglandin E1 analogues and some non-steroidal anti-inflammatory drugs (NSAIDs).6 Rat models are commonly used to examine the effects of test substances on the GI system by assessing gastric emptying, intestinal motility, gastric secretion, and GI damage.

Gastric emptying and intestinal motility

Following administration of the test chemical, gastric emptying and intestinal motility are examined by feeding the animals a barium sulphate (BaSO4) or charcoal test meal.7 The test meal may serve either as a liquid transport indicator (phenol red) or as a solid transport indicator (BaSO4, charcoal). At the chosen time point, preferably near Cmax, the stomach is removed and weighed, since the weight of the stomach is directly proportional to the weight of the gastric content.7 For more accurate findings, the stomach must be weighed both full and empty to determine the weight of its content. Differences in gastric content weight between test groups indicate altered gastric emptying. For intestinal motility measurements, the intestine is prepared from the duodenum (to either the ileum or the rectum), and the length of intestine filled with BaSO4 or charcoal from the test meal is determined by visual examination and expressed as a proportion of the length of the whole gut. Any difference in BaSO4 or charcoal transit between the test group and the control group indicates a change in intestinal motility. With phenol red, any change in the spectral absorbance of particular regions of the intestine (usually 10 sub-segments) suggests altered intestinal transit.7
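The two read-outs described above reduce to simple arithmetic, which the following minimal sketch illustrates. The function names, units, and example values are hypothetical and are not taken from the cited protocol; the sketch only assumes that gastric content weight is the difference between the full and empty stomach weights, and that transit is the marker-filled length as a percentage of total gut length.

```python
def gastric_content_weight(full_stomach_g: float, empty_stomach_g: float) -> float:
    """Gastric content weight (g) as full minus empty stomach weight."""
    if empty_stomach_g < 0 or full_stomach_g < empty_stomach_g:
        raise ValueError("expected 0 <= empty weight <= full weight")
    return full_stomach_g - empty_stomach_g


def intestinal_transit_percent(marker_length_cm: float, total_length_cm: float) -> float:
    """Length of gut filled with BaSO4/charcoal as a percentage of total gut length."""
    if total_length_cm <= 0 or not 0 <= marker_length_cm <= total_length_cm:
        raise ValueError("expected 0 <= marker length <= total length, total > 0")
    return 100.0 * marker_length_cm / total_length_cm


if __name__ == "__main__":
    # Hypothetical values for one control and one treated animal.
    control = intestinal_transit_percent(marker_length_cm=63.0, total_length_cm=90.0)
    treated = intestinal_transit_percent(marker_length_cm=36.0, total_length_cm=90.0)
    print(f"control transit: {control:.1f}%  treated transit: {treated:.1f}%")
    print(f"gastric content: {gastric_content_weight(3.2, 1.4):.2f} g")
```

In practice the per-animal values would be compared between test and control groups with an appropriate statistical test, which is outside the scope of this sketch.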

Gastric secretion

Gastric secretion is measured by administering the test medication parenterally after ligation of the pylorus and monitoring the stomach contents for changes in volume, pH, total acidity, and acid production over time. Gastric secretion tests are typically conducted once alterations in gastric emptying have been observed. Opioid, dopamine, and beta-adrenoceptor agonists significantly impair gastric emptying and intestinal motility.8 Muscarinic receptor agonists, in contrast, tend to promote gastric emptying, intestinal motility, and gastric secretion, whereas antagonists have the opposite effect. Unpublished data from Dr. Sabine Pestel (Boehringer-Ingelheim Pharma GmbH & Co) on 59 test compounds evaluated between 2009 and 2001 revealed a greater incidence and severity of effects on gastric emptying (85% vs. 45%) and intestinal transit (70% vs. 25%) for compounds derived from oncology projects than for those from non-oncology projects.8 These effects were also identified at lower margins in oncology projects than in non-oncology projects (2–5 versus 10–30-fold on a dose-based scale).8
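As an illustration of how volume and total acidity combine into acid production over time, the sketch below computes an hourly acid output. The units (mL, mEq/L, hours), the function name, and the example values are assumptions made for the example and are not specified in the source; the only relationship used is that amount equals volume multiplied by concentration.

```python
def acid_output_ueq_per_h(volume_ml: float,
                          total_acidity_meq_per_l: float,
                          collection_h: float) -> float:
    """Acid output in microequivalents per hour.

    1 mEq/L equals 1 uEq/mL, so volume (mL) x acidity (mEq/L) gives uEq.
    """
    if volume_ml < 0 or total_acidity_meq_per_l < 0 or collection_h <= 0:
        raise ValueError("volume and acidity must be >= 0, duration must be > 0")
    return volume_ml * total_acidity_meq_per_l / collection_h


if __name__ == "__main__":
    # Hypothetical pylorus-ligation read-out: 4 mL collected over 4 h at 80 mEq/L.
    print(acid_output_ueq_per_h(4.0, 80.0, 4.0), "uEq/h")
```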

Newer technology

Visual inspection of the stomach and intestinal tract, together with ulceration index scores, is often used to measure GI damage after administration of the lead candidate medicine. The use of biomarkers for GI damage is a recent advancement in safety pharmacology for GI evaluation.8 Biomarkers specific to GI damage, such as blood citrulline, faecal miR-194, and calprotectin, are being investigated and offer potential for use in safety evaluations. More validation and consensus are required before they can be incorporated into routine safety pharmacology evaluations. In addition, wireless capsules, radiotelemetry, and in silico methods (PBPK modelling) are being investigated for the evaluation and prediction of gastric emptying, intestinal motility, and GI damage, in order to reduce both unnecessary stress on the animals and the number of animals used.

Renal system

Nephrotoxicity, as well as drug-induced changes in renal function, may be underestimated, based on the current evidence from preclinical research and clinical trials.

In addition, unpublished data from Dr. Sabine Pestel (Boehringer-Ingelheim Pharma GmbH & Co) on 99 test compounds evaluated between 2004 and 2011 revealed that nearly 70% of all test compounds exhibited effects on renal function, and close to 50% were indicative of kidney injury based on biomarker changes.8 Consequently, there is a growing need to incorporate routine evaluation of the renal system into safety pharmacology testing. Renal effects can be categorised as altered renal function (diuresis or anti-diuresis) and organ damage, such as acute kidney injury (AKI), which can include localised injury to glomeruli, renal papillae, and/or different regions of the tubules.9

According to ICH guidelines, assessing renal function by measuring urine volume and electrolyte excretion in rats or dogs is a supplemental part of safety pharmacology, advised depending on the information collected about the NCE being tested.9 Routinely, urine and serum samples are used for clinical chemistry-based assessments to identify drug-induced renal impairment, and isolated organ preparations are used for further mechanistic research.9 For assessing kidney function, the battery of tests includes measurements of clearance rate, glomerular filtration rate (GFR), urine volume, osmolality, pH, Na+, Cl−, K+, creatinine, and urea, as well as serum Na+, Cl−, K+, creatinine, and blood urea nitrogen (BUN).

Renal function assessments

GFR, a key metric for evaluating renal function, is computed using both urine and serum samples from the animals. Multiple blood samples should not be taken prior to urine collection, since blood sampling affects urine volume. Nonetheless, samples from several time points are valuable, since understanding renal function requires knowledge of kinetics. Restricting sampling to three samples within 24 hours is therefore a reasonable compromise that avoids additional disturbance. Mathematical modelling is then employed to extrapolate from the collected data and compute GFR, enhancing the reliability of the data while using fewer animals.9 If the study design demands repeated samples from the same animal, larger species such as dogs may be used. Alternatively, a newly designed integrated pharmacology testing system enables simultaneous measurement of GFR and renal plasma flow in surgically prepared rats. This system integrates BASi Culex® automated blood collection, radiotelemetry, quantitative urinalysis, and nephron site-specific urinary biomarkers of damage.9
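To make the link between urine and serum samples concrete, the sketch below applies the standard renal clearance relationship, clearance = (urine concentration × urine flow) / plasma concentration, using creatinine clearance as a surrogate for GFR. This is an illustrative assumption and not the modelling approach of the cited work: the function name, units, example values, and the choice to average up to three serum samples are all hypothetical.

```python
from statistics import mean
from typing import Sequence


def creatinine_clearance_ml_per_min(urine_creatinine_mg_dl: float,
                                    urine_volume_ml: float,
                                    collection_min: float,
                                    serum_creatinine_mg_dl: Sequence[float]) -> float:
    """Estimate GFR as creatinine clearance: C = (U x V) / P.

    U: urine creatinine concentration (mg/dL)
    V: urine flow rate (mL/min), from collected volume and duration
    P: serum creatinine (mg/dL), here the mean of the available samples
    """
    if not 1 <= len(serum_creatinine_mg_dl) <= 3:
        raise ValueError("this sketch expects one to three serum samples")
    if collection_min <= 0 or min(serum_creatinine_mg_dl) <= 0:
        raise ValueError("duration and serum concentrations must be positive")
    urine_flow_ml_min = urine_volume_ml / collection_min
    plasma_mg_dl = mean(serum_creatinine_mg_dl)
    return urine_creatinine_mg_dl * urine_flow_ml_min / plasma_mg_dl


if __name__ == "__main__":
    # Hypothetical 24 h collection with three serum samples.
    gfr = creatinine_clearance_ml_per_min(50.0, 14.4, 24 * 60, [0.4, 0.5, 0.6])
    print(f"estimated GFR: {gfr:.2f} mL/min")
```

Averaging the serum values is the simplest possible treatment of the kinetics; a fitted model of the concentration-time course would replace that step in a more faithful analysis.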

Clinical chemistry can predict renal toxicity after a single dose of the test medication, although its sensitivity is somewhat poor in comparison to NMR-based metabolomics techniques. With improved assessment tools and semi-automated methods, sensitivity might be significantly enhanced.

Kidney injury markers

Kidney injury is also evaluated using functional and leakage markers. Urinary glucose, protein, albumin, calcium, or any other molecule known to be transported in a particular region of the kidney may serve as functional markers of kidney damage. As leakage markers of renal damage, clinical chemistry measures urinary aspartate aminotransferase (AST), alanine aminotransferase (ALT), lactate dehydrogenase (LDH), γ-glutamyl transferase (GGT), alkaline phosphatase (ALP), and N-acetyl-β-D-glucosaminidase (β-NAG).9 Additional leakage markers, such as kidney injury molecule-1 (KIM-1) and clusterin (CLU), may be evaluated using antibody-based approaches. Due to the high concentration of test drug in the loop of Henle and renal papillae, proximal tubule toxicity is prominent in acute kidney injury (AKI).9 Proximal tubule toxicity is the form more often associated with drug-induced nephrotoxicity.

New technology

The use of molecular biomarkers is one of the newest advancements in safety pharmacology and may increase the depth and breadth of renal toxicity evaluations, covering both function and damage. Molecular biomarkers improve the prediction of renal toxicity because histological evaluation can give false-negative results, owing to the time required for histopathological changes to manifest after the insult and to the location of the section examined (regional bias).10 More efficient detection and prediction of region-specific nephrotoxicity therefore requires molecular biomarkers. Recent urinary biomarkers of kidney damage that have passed preclinical qualification include KIM-1, CLU, albumin, total protein, β2-microglobulin, cystatin C, and trefoil factor 3 (TFF3).10 Because molecular biomarkers may themselves produce false-positive results, a conclusion of renal toxicity should be based on the combined information from renal function assessment, histology, and molecular biomarker read-outs. Recently, NMR- and mass spectrometry-based metabolomics techniques for detecting known biomarkers of nephrotoxicity have been investigated.11

Recent and Emerging Concepts

Recent trends to enhance and refine the scope of safety pharmacology include a focus on frontloading, the exploration of alternative models, the combination of core battery tests, the integration of safety pharmacology endpoints into regulatory toxicology studies, and the correlation of non-clinical safety endpoints with clinical outcomes. As approaches and methods continue to evolve, safety pharmacology has come to contribute to better decision-making in the selection of lead candidates throughout drug discovery and development.12

There is a strong need to introduce safety evaluations in the early phases of drug discovery and development. This would assist the ranking of NCEs, improve the identification of lead candidates, and eventually reduce the time and costs associated with drug research.13 In safety pharmacology research, the technique of "frontloading" fulfils this need. "Frontloading" is described as "safety investigations undertaken during lead optimisation of compounds prior to selecting a candidate medication for development and doing regulatory studies." Before in vivo investigations are initiated, it is becoming increasingly important to understand the tendency of molecules to produce undesirable effects, in order to lessen the possibility of drug development being terminated at later stages.14 In contrast to the core battery tests, frontloading safety pharmacology investigations are not conducted in conformity with GLP.15

Conclusion

It is worthwhile to investigate the utility of alternative models for answering diverse safety pharmacology questions. Although the origin of the zebrafish model is still debatable, the zebrafish has great promise as a rapid method for early compound screening in all facets of frontloading. Further validation of this model in a variety of studies may lead to its routine use as a frontloading model in the future. The incorporation of emerging concepts, such as biomarkers and common safety pharmacology and toxicology endpoints, should occur concurrently with mandatory safety pharmacology protocols in order to validate the accuracy and reproducibility of these tests, thereby enhancing safety pharmacology studies and predictive endpoints for safer therapeutics.